Every day people use electronic devices, and modern life is impossible without them: TV, radio, computers, telephones, multicookers and so on. Just a few years ago, hardly anyone thought about what kind of signal each of these devices used. Now the words “analog”, “digital” and “discrete” are familiar to everyone. Some of these signal types offer high quality and reliability.

Digital transmission came into use much later than analog, because an analog signal is much simpler to handle and the technology of the time was not yet advanced enough for digital.

Everyone encounters the concept of “discreteness” all the time. Translated from Latin, the word means “discontinuity.” In scientific terms, a discrete signal is a way of transmitting information in which the carrier changes only at separate moments in time; at each of those moments it can take any of the possible values. In electronics the word has another sense: discrete designs are now giving way to systems on a chip, which are integrated wholes whose components interact closely. A discrete design is the opposite: each component is a finished part connected to the others through dedicated lines.

Signal

In the most general sense, a signal is a code that one or more systems transmit into space.

In information and communications, a signal is a data carrier used to transmit messages. A signal can be generated without being received; reception is not part of the definition. If the signal carries a message, however, it has to be received (“caught”) for the message to get through.

Mathematically, a signal is specified by a function that describes all possible changes in its parameters. This model is fundamental in radio engineering theory. Noise is described in the same way: it is also a function of time, one that interacts with the transmitted signal and distorts it.

This article describes three types of signals, discrete, analog and digital, and briefly covers the underlying theory.

Digital audio processing methods

Digital audio is processed with mathematical operations applied to individual audio samples or to groups of samples of varying length. These operations can simulate traditional analog processing tools (mixing, summing, amplification or attenuation, modulation, etc.) or implement alternative methods such as spectral decomposition of a signal, adjustment of its frequency components and subsequent reassembly of the signal.

Digital processing can be “linear” (performed in real time on live audio) or “nonlinear” (performed offline on previously recorded audio). Sound is processed either by general-purpose processors (Intel 8035, 8051, 80x86, Motorola 68xxx, SPARC) or by specialized digital signal processors such as Analog Devices ADSP-xxxx, Texas Instruments TMS xxx, Motorola 56xxx, etc.
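Amplification, attenuation and mixing reduce to simple arithmetic on sample values. The sketch below is a minimal illustration, assuming floating-point samples normalized to the range -1.0 to 1.0; the function names are hypothetical, and the hard clipping in `mix` is a simplification (real mixers usually scale tracks down to avoid overload).

```python
def db_to_linear(gain_db: float) -> float:
    """Convert a gain in decibels to a linear amplitude factor."""
    return 10 ** (gain_db / 20)


def apply_gain(samples: list[float], gain_db: float) -> list[float]:
    """Amplify (positive dB) or attenuate (negative dB) every sample."""
    factor = db_to_linear(gain_db)
    return [s * factor for s in samples]


def mix(a: list[float], b: list[float]) -> list[float]:
    """Mix two equal-length tracks by summing them sample by sample.

    The sum is hard-clipped to [-1.0, 1.0] so it stays in the valid range.
    """
    if len(a) != len(b):
        raise ValueError("tracks must have the same length")
    return [max(-1.0, min(1.0, x + y)) for x, y in zip(a, b)]


quiet = apply_gain([0.5, -0.5, 0.25], -6.0)  # roughly halves each sample
mixed = mix([0.6, 0.2], [0.7, -0.1])          # the first pair clips to 1.0
```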

Digital signal processing methods are as follows:

- linear filtering;

- spectrum analysis;

- time-frequency analysis;

- adaptive filtering;

- nonlinear processing;

- multirate processing;

- convolution;

- sectioned (block) convolution.
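Linear filtering and convolution from the list above coincide in the simplest case: a finite impulse response (FIR) filter convolves the input samples with its impulse response. Below is a minimal, unoptimized sketch in plain Python; the moving-average kernel is just one illustrative low-pass choice, not a filter taken from the text.

```python
def convolve(x: list[float], h: list[float]) -> list[float]:
    """Full linear convolution: y[n] = sum over k of h[k] * x[n - k]."""
    if not x or not h:
        return []
    y = [0.0] * (len(x) + len(h) - 1)
    for n, xn in enumerate(x):
        for k, hk in enumerate(h):
            y[n + k] += xn * hk
    return y


def moving_average(x: list[float], taps: int) -> list[float]:
    """Simple low-pass FIR filter: each output is the mean of `taps` inputs."""
    return convolve(x, [1.0 / taps] * taps)


step = [0.0, 0.0, 1.0, 1.0, 1.0, 1.0]
smoothed = moving_average(step, 2)  # the sharp edge becomes a ramp
```

Sectioned (block) convolution applies this same operation to consecutive blocks of a long signal and adds the overlapping tails together (the overlap-add method).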

As you can see, even simple analog sound can be made to sound good with these tools; all it takes is knowing how to convert and process it properly.

Types of signals

Signals can be classified in several ways:

- By the physical nature of the carrier, signals are electrical, optical, acoustic or electromagnetic. A few other, less common types also exist.

- By how they are specified, signals are regular (deterministic) or irregular (random). Deterministic signals are specified by an analytical function. Random signals are described using probability theory and can take any value at any given moment.

- By the type of function that describes the signal, signals are analog, discrete or digital (discrete in time and quantized in level). All three are used in many electrical appliances.

These classifications are not hard to understand; a little thought and a basic school physics course are enough.
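The difference between analog, discrete and digital signals in the last item of the classification can be shown in a few lines. In this hedged sketch, a Python function stands in for the continuous analog signal (a 1 kHz sine, chosen arbitrarily), sampling it at fixed instants makes it discrete, and rounding each sample to one of 2^N integer codes makes it digital. The function names are hypothetical.

```python
import math


def analog(t: float) -> float:
    """Stand-in for a continuous signal: a 1 kHz sine wave."""
    return math.sin(2 * math.pi * 1000 * t)


def sample(signal, fs: float, n_samples: int) -> list[float]:
    """Discretize in time: evaluate the signal at t = n / fs."""
    return [signal(n / fs) for n in range(n_samples)]


def quantize(x: float, bits: int) -> int:
    """Discretize in level: map x in [-1.0, 1.0] to a signed integer code."""
    max_code = 2 ** (bits - 1) - 1   # e.g. 32767 for 16 bits
    min_code = -(2 ** (bits - 1))    # e.g. -32768 for 16 bits
    return max(min_code, min(max_code, round(x * max_code)))


discrete = sample(analog, fs=8000, n_samples=8)  # 8 time points, any level
digital = [quantize(v, bits=3) for v in discrete]  # 8 time points, 3-bit codes
print(digital)  # [0, 2, 3, 2, 0, -2, -3, -2]
```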

Basic audio file formats

There are many audio file formats, but only a few have gained wide acceptance. They fall into three groups:

- uncompressed formats;

- formats with lossless compression;

- formats with lossy compression.

Let's look at the main audio file formats:

- WAV was one of the first audio formats that computer programs could process at a professional level. Its drawback is that recordings take up a lot of space.

- CD audio (.cda): .cda files are only references to the tracks on an audio CD, so they cannot be edited directly, but the tracks can be converted and saved in another format with any audio processing program.

- MP3 is a near-universally supported lossy format that greatly reduces file size.

- AIFF supports mono and stereo data at 8 and 16 bits. It was originally developed for the Macintosh, but after further development it can be used on other platforms as well.

- OGG is a popular format, but it requires its own codecs and decoders and can put a heavy load on the computer's system resources.

- AMR is a low-bitrate format designed for speech, so its audio quality is low.

- MIDI stores performance data (which keys were pressed, when and how) rather than recorded sound, so you can edit the notes, change the tempo, key and pitch, and add effects.

- FLAC is a lossless compressed format, so it reproduces audio at full original quality.
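WAV files usually hold uncompressed PCM audio, which is why they take up so much space. The arithmetic below is a sketch assuming CD-quality audio (44.1 kHz, 16-bit, stereo) and, for comparison, a hypothetical 320 kbit/s constant-bitrate MP3; file headers and container overhead are ignored.

```python
def pcm_size_bytes(sample_rate_hz: int, bit_depth: int, channels: int, seconds: float) -> float:
    """Size of raw, uncompressed PCM audio data, excluding any file header."""
    return sample_rate_hz * (bit_depth / 8) * channels * seconds


def cbr_size_bytes(bitrate_bps: int, seconds: float) -> float:
    """Size of a constant-bitrate compressed stream (for example, an MP3)."""
    return bitrate_bps * seconds / 8


one_minute_cd = pcm_size_bytes(44_100, 16, 2, 60)  # 10,584,000 bytes, about 10 MB
one_minute_mp3 = cbr_size_bytes(320_000, 60)       # 2,400,000 bytes
ratio = one_minute_cd / one_minute_mp3              # about 4.41
```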

Why is the signal processed?

A signal is processed in order to transmit and receive the information encoded in it. Once extracted, this information can be used in many ways; in some cases it is converted to another format.

There is another reason for signal processing: compression. The signal's frequency band is slightly reduced (without damaging the information) so that it can then be encoded and transmitted at a lower data rate.

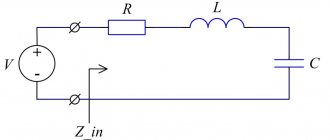

Both analog and digital signals are processed with special techniques, in particular filtering, convolution and correlation. These are needed to restore a signal that is damaged or noisy.
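Correlation is commonly used to find a known pattern, such as a synchronization sequence, inside a noisy signal: the offset where the pattern lines up best gives the largest correlation value. A minimal sketch in plain Python; the pattern and the “received” values are made up for illustration.

```python
def cross_correlation(x: list[float], pattern: list[float]) -> list[float]:
    """Slide `pattern` along `x` and return the dot product at every offset."""
    m = len(pattern)
    return [
        sum(x[i + k] * pattern[k] for k in range(m))
        for i in range(len(x) - m + 1)
    ]


def find_pattern(x: list[float], pattern: list[float]) -> int:
    """Return the offset at which `pattern` best matches `x`."""
    scores = cross_correlation(x, pattern)
    return max(range(len(scores)), key=scores.__getitem__)


pattern = [1.0, -1.0, 1.0, 1.0]
# The pattern starts at index 3, distorted and surrounded by small noise-like values.
received = [0.1, -0.2, 0.0, 0.9, -1.1, 1.2, 0.8, 0.1, -0.1]
offset = find_pattern(received, pattern)  # 3
```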

DSD format

Once delta-sigma DACs became widespread, a format that records audio directly in delta-sigma encoding was a logical next step.

This format is called DSD (Direct Stream Digital). It never became widespread, for several reasons. Editing DSD files turned out to be severely limited: you cannot mix streams, adjust volume or apply equalization. Without loss of quality, the format can therefore only be used to archive analog recordings and to make two-microphone recordings of live performances with no further processing. In short, there was little money to be made with it.

As an anti-piracy measure, SA-CD discs were not (and still are not) readable by computers, which makes copying them impossible. No copies, no wide audience. DSD content could be played only from a proprietary disc on a dedicated SA-CD player. While PCM has the SPDIF standard for transferring digital data from a source to a separate DAC, DSD had no such standard, so the first pirated copies of SA-CD discs were digitized from the analog outputs of SA-CD players. The situation seems absurd, but some recordings were released only on SA-CD, or the Audio CD version of the same recording was deliberately made worse to promote SA-CD.

The turning point came with SONY game consoles, which copied an SA-CD disc to the console's hard drive before playback. DSD enthusiasts took advantage of this, and the resulting pirated recordings pushed the market to release separate DACs that could play DSD streams. Today most external DACs with DSD support accept it over USB using DoP (DSD over PCM), which packs the DSD data into PCM frames so that it can also be carried over SPDIF.

DSD carrier frequencies are relatively low, 2.8 and 5.6 MHz, but the stream needs no data-reduction conversion and competes well with high-resolution formats such as DVD-Audio.
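The 2.8 and 5.6 MHz figures are 64 and 128 times the CD sampling rate of 44.1 kHz (commonly labeled DSD64 and DSD128), with one bit per sample per channel. A quick sketch comparing the resulting raw bit rates with PCM; the stereo channel count and the PCM settings are example assumptions.

```python
CD_SAMPLE_RATE = 44_100


def dsd_bitrate(multiple: int, channels: int = 2) -> int:
    """Raw bit rate of a 1-bit DSD stream clocked at `multiple` x 44.1 kHz."""
    return multiple * CD_SAMPLE_RATE * 1 * channels


def pcm_bitrate(sample_rate: int, bit_depth: int, channels: int = 2) -> int:
    """Raw bit rate of uncompressed PCM."""
    return sample_rate * bit_depth * channels


dsd64 = dsd_bitrate(64)           # 5,644,800 bit/s (2.8224 MHz per channel)
dsd128 = dsd_bitrate(128)         # 11,289,600 bit/s
cd = pcm_bitrate(44_100, 16)      # 1,411,200 bit/s
hires = pcm_bitrate(192_000, 24)  # 9,216,000 bit/s
```

By raw bit rate, DSD64 sits between CD audio and 24-bit/192 kHz PCM.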

There is no clear answer to the question of which is better, DSD or PCM. It all depends on the quality of a particular DAC's implementation and on the skill of the sound engineer who recorded the final file.

Dynamic range

Dynamic range is the difference between the loudest and quietest levels of a signal, expressed in decibels. It depends entirely on the material and the performance, whether music or ordinary conversation. For example, a newsreader's speech has a dynamic range of about 25-30 dB, while an expressive reading of a literary work can reach 50 dB.
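Because decibels are logarithmic, a dynamic range figure hides a large amplitude ratio. For amplitude-like quantities such as sound pressure, the usual conversion is 20·log10 of the ratio; the sketch below applies it to the numbers in the paragraph above.

```python
import math


def dynamic_range_db(loudest: float, quietest: float) -> float:
    """Dynamic range in dB between two amplitude (e.g. sound pressure) values."""
    return 20 * math.log10(loudest / quietest)


def amplitude_ratio(range_db: float) -> float:
    """Inverse: how many times larger the loudest amplitude is than the quietest."""
    return 10 ** (range_db / 20)


print(round(amplitude_ratio(30), 1))  # 31.6, a newsreader's 30 dB
print(round(amplitude_ratio(50), 1))  # 316.2, an expressive reading at 50 dB
```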

Sound - what is it?

Sound is a physical phenomenon: elastic waves of mechanical vibration propagating through a gas, liquid or solid. The term usually refers to the vibrations that people and animals can perceive. The main characteristics of sound are its amplitude and its frequency spectrum. For humans, the audible range extends from about 16-20 Hz to 15-20 kHz. Frequencies below this range are called infrasound; above it, ultrasound (up to 1 GHz) and hypersound (above 1 GHz). The loudness of a sound depends on the sound pressure (its effective value), the waveform and the frequency, while its pitch depends mainly on frequency and, to a lesser degree, on sound pressure.

Analog signal

An analog signal is a time-continuous way of transmitting data. Its main drawback is noise, which can sometimes destroy the information completely: it is often impossible to tell which part of the received waveform is useful data and which is ordinary distortion.

It is because of this that digital signal processing has gained great popularity and is gradually replacing analog.

How to receive digital TV

Reception of a digital signal is possible if the TV has a built-in receiver that supports the following formats:

- DVB-T2 – for receiving over-the-air signals.

- DVB-C, DVB-C2 – for connecting to cable television.

- DVB-S, DVB-S2 – for reception via satellite dish.

Another way to get a high-quality digital signal is over the Internet. For this, the TV must have Wi-Fi or a LAN port and support Smart TV features.

If the TV meets these requirements, all that remains is to configure it: select the signal source (cable, antenna or Wi-Fi/LAN), start a search for digital channels and wait for the result. Otherwise, you will need to buy a suitable set-top box. In most cases the old antenna that received analog broadcasts will still work; in rare cases it has to be aimed precisely at the television transmitter or replaced with a new one.

Digital signal

A digital signal is a data stream described by discrete functions: its amplitude can take only values from a predefined set. Whereas an analog signal may arrive heavily contaminated with noise, a digital receiver can reject most of the noise it picks up.

Digital transmission also carries information without redundant overhead, and several data streams can be sent over one physical channel at the same time.

The digital signal is not subdivided into further types; it is a separate, independent method of data transmission that takes the form of a binary stream. Today it is the most widely used type of signal because it is easy to work with.

Advantages of digital over analog

Analog television served us for many years. Generating, transmitting and receiving it requires only relatively cheap transmitters and receivers. What more could you want? The problem is that electromagnetic waves always meet obstacles and interference along the way, much as fog blurs a person's view of the world. For analog broadcasting, this “fog” comes from industrial electromagnetic radiation, other radio signals, terrain, solar magnetic storms and natural signal attenuation. As a result, analog information often reaches receivers distorted, which means poor sound and a noisy, distorted picture.

The digital signal has obvious advantages over the analog one. Images and sound are first encoded and only then transmitted as digital data, which is far more resistant to distortion: as long as the signal reaches the receiver at a usable level, the picture and sound arrive intact.

For comparison, consider old telephone exchanges and modern cellular networks: the former were analog, while the latter use digital signals. On an old landline you could barely hear a caller across town, but on a cell phone you can talk clearly with someone on another continent. The same thing happened with television: thanks to coding, information reaches receivers unchanged, so the TV reproduces high-quality picture and sound.

Analog television is outdated and no longer meets modern requirements. Digital television, although relatively new, has surpassed it in every respect. The volume of transmitted data keeps growing, and with it picture and sound quality improve and the number of channels increases.

Application of digital signal

What distinguishes a digital electrical signal from the others? It can be completely regenerated in a repeater. When a signal with slight interference arrives at communication equipment, the equipment immediately restores its clean digital form. This allows, for example, a TV tower to retransmit the signal without the accumulated noise.

If the code arrives heavily distorted, however, it cannot be restored. An analog repeater in the same situation can still extract part of the information, although at a high energy cost.

In cellular communications, for example, strong distortion on a digital line makes conversation almost impossible because words or whole phrases drop out, whereas over an analog line the conversation can still continue.

This is exactly why digital lines place regenerating repeaters at short intervals: each one restores the signal before the distortion grows too large to correct.
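Regeneration can be illustrated with a toy binary line. This sketch assumes two signal levels (0.0 and 1.0), uniformly distributed noise and a repeater that simply compares each received value with a 0.5 threshold; the names are hypothetical, and real systems also use error-correcting codes, which are not modeled here. As long as the noise stays below half the distance between levels, every bit is restored exactly; with heavier noise, some bits flip and the repeater cannot tell.

```python
import random


def transmit(bits: list[int], noise: float, rng: random.Random) -> list[float]:
    """Send bits as levels 0.0/1.0 and add uniform noise in [-noise, +noise]."""
    return [b + rng.uniform(-noise, noise) for b in bits]


def regenerate(levels: list[float], threshold: float = 0.5) -> list[int]:
    """Repeater decision: every received level becomes a clean 0 or 1 again."""
    return [1 if v > threshold else 0 for v in levels]


rng = random.Random(1)
bits = [rng.randint(0, 1) for _ in range(1000)]

# Noise smaller than half the level spacing: regeneration is perfect.
clean = regenerate(transmit(bits, 0.4, rng))
# Heavy noise: some bits cross the threshold and come out wrong.
noisy = regenerate(transmit(bits, 1.5, rng))
errors = sum(a != b for a, b in zip(bits, noisy))
```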

How DACs build a wave

A DAC (digital-to-analog converter) is the component that converts digital sound into analog form. Here we only look at the basic principles; if the comments show interest in some points, they will be covered in more detail in a separate article.

Multibit DACs

A digital wave is often drawn as a staircase. This comes from the architecture of first-generation multibit R-2R DACs, which work much like a relay switch.

On each clock cycle, the DAC input receives the next sample value (vertical coordinate), and the DAC switches its output current (voltage) to the corresponding level, holding it there until the next change.
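An idealized model of the staircase: an R-2R ladder outputs a voltage proportional to the binary code it receives, and the output is held constant until the next sample arrives (a zero-order hold). This sketch assumes an ideal, unloaded ladder with a hypothetical reference voltage `v_ref`; real converters add resistor mismatch and switching errors.

```python
def r2r_output(code: int, bits: int, v_ref: float) -> float:
    """Ideal R-2R ladder: Vout = v_ref * code / 2**bits."""
    if not 0 <= code < 2 ** bits:
        raise ValueError("code out of range")
    return v_ref * code / 2 ** bits


def zero_order_hold(levels: list[float], steps_per_sample: int) -> list[float]:
    """Repeat each level so it stays constant for a whole sample period."""
    return [v for v in levels for _ in range(steps_per_sample)]


codes = [0, 64, 128, 192, 255]                     # successive 8-bit sample values
voltages = [r2r_output(c, 8, 5.0) for c in codes]  # 0.0, 1.25, 2.5, 3.75, ~4.98 V
staircase = zero_order_hold(voltages, 4)            # each step lasts 4 time units
```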

Although the human ear is generally believed to hear nothing above 20 kHz, and by the Nyquist-Shannon sampling theorem a signal sampled at 44.1 kHz can be restored up to 22.05 kHz, the quality of the restored signal remains in question. In the high-frequency region, the resulting “stepped” waveform is usually far from the original. The simplest solution is to raise the sampling rate during recording, but this significantly and undesirably increases file size.

An alternative is to artificially increase the DAC's playback sampling rate by adding intermediate values. That is, we imagine a continuous wave (gray dotted line) smoothly connecting the original coordinates (red dots) and add intermediate points on this line (dark purple).

When increasing the sampling frequency, it is usually necessary to increase the bit depth so that the coordinates are closer to the approximated wave.

Thanks to intermediate coordinates, it is possible to reduce the “steps” and build a wave closer to the original.

When a player or external DAC offers upsampling from 44.1 to 192 kHz, it adds intermediate coordinates; it does not restore or create sound above 20 kHz.
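Adding intermediate coordinates can be sketched with the simplest possible interpolation, a straight line between neighboring samples. This is a stand-in rather than what DAC chips actually do: real oversampling filters produce smoother curves (typically with FIR interpolation filters), and 44.1 to 192 kHz is not an integer ratio, so the integer `factor` here is a simplifying assumption.

```python
def upsample_linear(samples: list[float], factor: int) -> list[float]:
    """Insert (factor - 1) evenly spaced points between each pair of samples."""
    if not samples:
        return []
    out = []
    for a, b in zip(samples, samples[1:]):
        for k in range(factor):
            out.append(a + (b - a) * k / factor)  # point k/factor of the way from a to b
    out.append(samples[-1])
    return out


print(upsample_linear([0.0, 1.0, 0.0], 4))
# [0.0, 0.25, 0.5, 0.75, 1.0, 0.75, 0.5, 0.25, 0.0]
```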

Initially this was done by separate SRC chips placed before the DAC; the function later moved into the DAC chips themselves. Some modern designs still add a separate SRC chip to provide an alternative to the DAC's built-in algorithms and sometimes get even better sound (as, for example, in the Hidizs AP100).

The industry largely abandoned multibit DACs because their quality could not be improved further with existing production technologies and because they cost more than “pulse” DACs with comparable specifications. In Hi-End products, however, old multibit DACs are often preferred over newer solutions with technically better specifications.

Switching DACs

In the late 1980s, an alternative DAC design based on a “pulse” architecture, delta-sigma, became widespread. It became practical once ultra-fast switches appeared, which allowed the use of high carrier frequencies.

The signal amplitude is the average value of the pulse amplitudes (pulses of equal amplitude are shown in green, and the resulting sound wave is shown in white).

For example, eight clock cycles containing five pulses give an average amplitude of (1+1+1+0+0+1+1+0)/8 = 0.625. The higher the carrier frequency, the more pulses are averaged and the more accurate the resulting amplitude. This made it possible to represent the audio stream in one-bit form with a wide dynamic range.

The averaging can be done with an ordinary analog filter. Even if the pulse train is fed directly to a loudspeaker, the output is sound, because the driver's high inertia keeps it from reproducing the ultra-high frequencies. Class D PWM amplifiers work on a similar principle, except that the energy density is set not by the number of pulses but by the duration of each pulse (which is easier to implement but cannot be described with a simple binary code).
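The pulse-density idea can be reproduced with a first-order delta-sigma modulator, the simplest member of the family. This Python sketch assumes input levels between 0.0 and 1.0 and a 1-bit output; commercial DACs use higher-order, noise-shaped and often multibit modulators, so treat it as an illustration of the principle only. Averaging the output bits stands in for the analog filter.

```python
def delta_sigma_1st_order(samples: list[float]) -> list[int]:
    """Convert levels in [0.0, 1.0] into a 1-bit pulse stream.

    The integrator accumulates the difference between the input and the
    bits already emitted, so the density of 1s tracks the input level.
    """
    integrator = 0.0
    bits = []
    for x in samples:
        integrator += x
        bit = 1 if integrator >= 0.5 else 0  # 1-bit quantizer
        integrator -= bit                    # feed the output back
        bits.append(bit)
    return bits


def average(bits: list[int]) -> float:
    """Stand-in for the analog low-pass filter: the mean pulse level."""
    return sum(bits) / len(bits)


pulses = delta_sigma_1st_order([0.625] * 8)  # [1, 0, 1, 1, 0, 1, 0, 1]
level = average(pulses)                      # 0.625: five pulses in eight cycles
```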

A multibit DAC can be compared to a printer that applies each color from a ready-mixed Pantone ink. A delta-sigma DAC is like an inkjet printer with a limited set of colors: because it can place very small dots (compared with the first printer), it produces more shades by varying the density of dots per unit area.

In an image, we usually do not see individual dots due to the low resolution of the eye, but only the average tone. Likewise, the ear does not hear impulses individually.

Ultimately, with current technology, pulse DACs can produce a wave close to the one theoretically obtained by interpolating intermediate coordinates.

Note that since the advent of delta-sigma DACs, drawing a “digital wave” as steps is no longer accurate, because modern DACs do not build the wave in steps. A discrete signal is more correctly drawn as dots connected by a smooth line.

Are switching DACs ideal?

But in practice, not everything is rosy, and there are a number of problems and limitations.

Since the overwhelming majority of recordings are stored as multibit signals, converting them to a pulse signal on a “bit to bit” basis would require an impractically high carrier frequency, which modern DACs do not support.
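To see the scale of the problem, read “bit to bit” as giving every possible level of a sample its own one-bit time slot within one sample period (our interpretation of the phrase, not stated in the text). The required clock is then the sample rate multiplied by 2 to the power of the bit depth.

```python
def naive_pulse_clock_hz(sample_rate_hz: int, bit_depth: int) -> int:
    """Clock needed if each sample period holds 2**bit_depth one-bit slots."""
    return sample_rate_hz * 2 ** bit_depth


cd = naive_pulse_clock_hz(44_100, 16)      # 2,890,137,600 Hz, about 2.9 GHz
hires = naive_pulse_clock_hz(192_000, 24)  # 3,221,225,472,000 Hz, about 3.2 THz
```

For comparison, the 2.8224 MHz clock of DSD64 is exactly 1024 times lower than the CD-quality figure.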

The main task of modern pulse DACs is therefore to convert a multibit signal into a one-bit signal at a relatively low carrier frequency, which involves reducing the amount of data. It is largely these conversion algorithms that determine the final sound quality of a pulse DAC.

To ease the high-carrier-frequency problem, the audio stream is split into several one-bit streams, each responsible for its own group of bits; this is equivalent to multiplying the carrier frequency by the number of streams. Such DACs are called multibit delta-sigma DACs.

Today, pulse DACs have gained a second wind in products from NAD and Chord, which implement them on high-speed general-purpose chips so that the conversion algorithms can be flexibly programmed.

Discrete signal

Nowadays almost everyone uses a mobile phone or some kind of “dialer” program on a computer. One task of such devices and programs is to transmit a signal, in this case a voice stream. Carrying a continuous wave would require a channel with very high throughput, which is why a discrete signal is used instead: it transmits not the wave itself but its digital representation. What are the advantages of this approach? The total amount of transmitted data is reduced, and packet transmission is easier to organize.

The concept of “sampling” has long been established in computing. With a discrete signal, information is transmitted not as a continuous stream but as data collected into separate blocks, each a complete, self-contained unit. This encoding method has long been pushed into the background but has not disappeared; it is still convenient for transmitting small pieces of information.

Sampling frequency and bit depth

These two concepts come up often in descriptions of digital recording devices. The sampling rate is how often the recording device takes samples of the input signal. When analog audio is converted to digital, it is recorded as individual samples, that is, values of the signal's intensity at specific moments in time.

Common standard sampling rates include 44.1 kHz (audio CD), 48 kHz, 96 kHz and 192 kHz.

To obtain the best quality digital recording, you should use a higher sampling rate: due to the greater number of samples per second of time, the quality of the converted sound improves.
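The sampling rate also sets an upper limit: by the Nyquist-Shannon theorem, only frequencies below half the sampling rate are captured correctly, and a tone above that limit “folds back” and appears at a lower, false frequency (aliasing). This is why recorders filter the input before sampling. The helper below is a hedged sketch that computes where a pure tone lands for an ideal sampler without such a filter.

```python
def alias_frequency(f_hz: float, fs_hz: float) -> float:
    """Apparent frequency of a pure tone at f_hz after ideal sampling at fs_hz."""
    folded = f_hz % fs_hz               # fold into the range [0, fs)
    return min(folded, fs_hz - folded)  # mirror into [0, fs / 2]


print(alias_frequency(1_000, 44_100))   # 1000: below fs/2, unchanged
print(alias_frequency(30_000, 44_100))  # 14100: above fs/2, appears as a false tone
print(alias_frequency(30_000, 96_000))  # 30000: a higher rate captures it correctly
```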

What is bit depth? In descriptions of recording devices we often see figures such as 16 bits or 24 bits. They give the number of bits used to represent the value of each sample obtained during digital recording. The higher the bit depth, the higher the quality of the resulting sound. Note, however, that bit depth does not change the intensity of the sound itself, only the accuracy with which it is represented.
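The effect of bit depth can be quantified. N bits give 2^N quantization levels, and for an ideal quantizer driven by a full-scale sine wave the theoretical signal-to-quantization-noise ratio is about 6.02·N + 1.76 dB. The sketch below evaluates this textbook formula; real converters fall short of it because of analog noise and other imperfections.

```python
import math


def quantization_levels(bits: int) -> int:
    """Number of distinct values an N-bit sample can take."""
    return 2 ** bits


def ideal_sqnr_db(bits: int) -> float:
    """Theoretical SQNR of an ideal N-bit quantizer with a full-scale sine input.

    Equals 10 * log10(1.5 * 4**bits), i.e. about 6.02 * bits + 1.76 dB.
    """
    return 10 * math.log10(1.5 * 4 ** bits)


for n in (16, 24):
    print(n, quantization_levels(n), round(ideal_sqnr_db(n), 2))
# 16 65536 98.09
# 24 16777216 146.26
```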